

Teaching and Researching Higher-Order Thinking in a Virtual Environment

Joseph J. Pear

University of Manitoba

Abstract

An innovation in studying the teaching and learning process has been developed at the University of Manitoba. The Computer-Aided Personalized System of Instruction (CAPSI) poses questions or problems on small units of study material that call for composed rather than option-based responses. The system integrates peer review with evaluation by the instructor and teaching assistants. Current research focuses on raising the thinking levels that students demonstrate in courses using CAPSI.

Introduction

Keller's (1968) personalized system of instruction (PSI) is a method of self-paced learning in which students work through course material by writing unit assignments on study questions or problems given to them beforehand. Other students act as reviewers or tutors by giving feedback on the unit assignments. PSI is a mastery system because students must demonstrate mastery of a given unit before they can proceed to the next unit. Research has shown that mastery learning in general, and the Keller system in particular, produce superior learning (Kulik, Kulik, & Bangert-Drowns, 1990).
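The mastery requirement can be made concrete with a small model of one student's progress. This is a hypothetical sketch: the class and method names are illustrative rather than taken from any PSI or CAPSI software, and it assumes, as in PSI generally, that a student who does not yet demonstrate mastery of a unit restudies and tries again.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class StudentProgress:
    """Hypothetical record of which units one student has mastered."""

    num_units: int
    passed: set[int] = field(default_factory=set)

    def can_attempt(self, unit: int) -> bool:
        """A unit is open only when every earlier unit has been mastered."""
        return 1 <= unit <= self.num_units and all(
            u in self.passed for u in range(1, unit)
        )

    def current_unit(self) -> Optional[int]:
        """Lowest-numbered unit not yet mastered, or None when the course is complete."""
        for unit in range(1, self.num_units + 1):
            if unit not in self.passed:
                return unit
        return None

    def record_attempt(self, unit: int, mastered: bool) -> None:
        """Record one unit assignment; a non-mastery result leaves the unit open."""
        if not self.can_attempt(unit):
            raise ValueError(f"unit {unit} is locked until earlier units are mastered")
        if mastered:
            self.passed.add(unit)


progress = StudentProgress(num_units=3)
progress.record_attempt(1, mastered=False)  # not yet mastered: still on unit 1
progress.record_attempt(1, mastered=True)   # mastery shown: unit 2 opens
print(progress.current_unit(), progress.can_attempt(3))  # 2 False
```

The design keeps no penalty for an unsuccessful attempt; the only consequence of a non-mastery result is that the next unit stays locked.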

Bloom's (1956) taxonomy in the cognitive domain is a system for categorizing the thinking levels required by specific questions, problems, or exercises. Bloom identified six major categories of thinking: (1) knowledge, (2) comprehension, (3) application, (4) analysis, (5) synthesis, and (6) evaluation. These categories are roughly hierarchical. For example, to be able to combine several basic concepts creatively into a new idea (level 5), one must have a good understanding or comprehension (level 2) of those basic concepts. Although Bloom's taxonomy is not the only possible way to classify thinking levels, it is widely known and used in education, and therefore provides a good starting point for teaching higher-order thinking and studying its development.

This paper describes a method for teaching and for studying the teaching and learning process, called the computer-aided personalized system of instruction (CAPSI; Pear & Crone-Todd, 1999), that combines Keller's PSI and Bloom's taxonomy. Combining the Keller system with Bloom's taxonomy presented some problems that required a technological solution. First, the Keller system requires a great deal of routine administrative work to maintain. Second, adding the thinking-level dimension increases the administrative work required. By automating repetitive tasks, computer technology increases the efficiency of the process. Perhaps even more importantly, computer technology makes it possible to study the process in a comprehensive, systematic manner.

The Method

As originally developed by Keller, PSI uses students in a more advanced course as reviewers of assignments written by students in less advanced courses. This made sense administratively, because the more advanced course provided a source of individuals who had presumably mastered the material in the less advanced course. With computer technology, however, a more advanced course is unnecessary. Each student's position in the course is available instantaneously, so the CAPSI program can use students in the same course who have already passed a unit as peer reviewers for that unit. An added benefit of using computer technology is that students do not have to be at one specific location at one specific time. CAPSI-taught courses at the University of Manitoba are conducted through the Internet.
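Because the program always knows which units each student has passed, choosing peer reviewers from within the same course reduces to a lookup. The following is a minimal sketch under stated assumptions: the roster format, the function name `assign_reviewers`, and the alphabetical tie-breaking are hypothetical, and the default of two reviewers mirrors the two-reviewer marking rule used in University of Manitoba courses rather than any documented CAPSI selection algorithm.

```python
def assign_reviewers(
    unit: int,
    author: str,
    passed_units: dict[str, set[int]],
    count: int = 2,
) -> list[str]:
    """Pick up to `count` classmates who have already passed `unit`.

    The author is never chosen to review their own assignment. Candidates are
    taken in alphabetical order only so that the result is deterministic.
    A short or empty list means the assignment needs another marker, such as
    the instructor or a teaching assistant.
    """
    eligible = sorted(
        student
        for student, units in passed_units.items()
        if student != author and unit in units
    )
    return eligible[:count]


roster = {"ana": {1, 2}, "ben": {1}, "cy": set(), "dee": {1, 2, 3}}
print(assign_reviewers(2, "cy", roster))   # ['ana', 'dee']
print(assign_reviewers(1, "ana", roster))  # ['ben', 'dee']
print(assign_reviewers(3, "dee", roster))  # []
```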

An important feature of CAPSI is its potential for quality control. In courses at the University of Manitoba, the program requires that a unit assignment be marked either by the instructor or a teaching assistant or by two peer reviewers. If two peer reviewers mark it, both must independently agree that the assignment is a pass for the program to record it as a pass. In addition, all assignments are automatically saved to disk so that the instructor can sample and evaluate them. There is also a built-in appeal process through which students can argue for the validity of a given answer. The program is applicable to any course topic and any set of questions or problems.
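The marking rule for unit assignments can be expressed as a small decision function. In this sketch the function name is hypothetical, a mark is `True` for a pass and `False` otherwise, and `None` is returned while marking is incomplete. Giving an instructor or teaching-assistant mark precedence over any peer marks is an assumption, since the text treats the two routes as alternatives.

```python
from typing import Optional


def recorded_as_pass(staff_mark: Optional[bool], peer_marks: list[bool]) -> Optional[bool]:
    """Decide whether a unit assignment is recorded as a pass.

    staff_mark: the instructor's or teaching assistant's mark, or None if neither marked it.
    peer_marks: independent marks from peer reviewers, in the order received.
    Returns True (pass), False (not a pass), or None (still awaiting marks).
    """
    if staff_mark is not None:
        # A single staff mark is sufficient (assumed to take precedence over peer marks).
        return staff_mark
    if len(peer_marks) < 2:
        # Peer marking needs two independent reviewers before anything is recorded.
        return None
    # Both peer reviewers must independently judge the assignment a pass.
    return all(peer_marks[:2])


print(recorded_as_pass(None, [True]))         # None
print(recorded_as_pass(None, [True, True]))   # True
print(recorded_as_pass(None, [True, False]))  # False
```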

The instructor inputs questions or problems and certain parameters, such as the number of units in the course, the course credit for each unit assignment, the course credit for peer reviewing, and whether there are to be examinations or projects in the course and their respective course credits.
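A course's setup might be captured in a configuration object along the lines below. The field names are hypothetical, and because the text does not say whether peer-reviewing credit is awarded per review or as a block, this sketch treats it as a single total; the `max_credit` helper simply adds up the credit the parameters make available.

```python
from dataclasses import dataclass, field


@dataclass
class CourseConfig:
    """Hypothetical instructor-entered parameters for a CAPSI-style course."""

    num_units: int
    credit_per_unit: float     # course credit for each unit assignment passed
    peer_review_credit: float  # total course credit available for peer reviewing
    exams: dict[str, float] = field(default_factory=dict)     # exam name -> credit
    projects: dict[str, float] = field(default_factory=dict)  # project name -> credit

    def __post_init__(self) -> None:
        if self.num_units < 1:
            raise ValueError("a course needs at least one unit")
        credits = [self.credit_per_unit, self.peer_review_credit,
                   *self.exams.values(), *self.projects.values()]
        if any(c < 0 for c in credits):
            raise ValueError("course credits must be non-negative")

    def max_credit(self) -> float:
        """Total course credit available across units, reviewing, exams, and projects."""
        return (self.num_units * self.credit_per_unit
                + self.peer_review_credit
                + sum(self.exams.values())
                + sum(self.projects.values()))


course = CourseConfig(num_units=10, credit_per_unit=5.0, peer_review_credit=10.0,
                      exams={"final": 30.0})
print(course.max_credit())  # 90.0
```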

The program then automates all the administrative functions of the course. Thus, the study material (e.g., text, videos, lectures), together with the questions, exercises, or problems selected or generated by the instructor, forms the basis or core of the system. The type of learning that students can acquire from the course will be highly dependent on this core. If the instructor writes questions that require only rote learning (level 1, or knowledge, in Bloom's taxonomy), for example, students will be unlikely to advance above the rote level. For this reason, CAPSI is designed for constructed or composed solutions or answers rather than option-based (e.g., true-false, multiple-choice) responses.

However, a method was still needed to ensure that students would learn and interact with the material at the highest possible level of thinking. Hence, a modified form of Bloom's taxonomy was integrated into the system. There were several reasons for modifying the taxonomy. One is that the taxonomy has reliability problems (e.g., Kotte & Schuster, 1990). Another is its complexity, which makes it difficult to apply. Of course, one would expect a classification of thinking levels to be complex. However, it seemed better to simplify the taxonomy and make it more reliable for the purpose of integrating it with CAPSI. It is anticipated that research on its use within the CAPSI program will lead to refinement and elaboration of this modified taxonomy.

The taxonomy as currently used with CAPSI is as follows:

- Rote knowledge (level 1): the answer is word-for-word or closely paraphrased from the study material.
- Comprehension (level 2): the answer is in the student's own words.
- Application (level 3): a concept is applied to a new problem or situation. Examples would be illustrating a concept with a new example (e.g., one not in the study material) and applying an equation to a new problem.
- Analysis (level 4): breaking down a concept into its parts. This occurs when, for example, one compares and contrasts two or more concepts.
- Synthesis (level 5): integrating two or more concepts to form something new. An example would be combining several styles of painting to produce a new style.
- Evaluation (level 6): providing a reasoned argument for or against a given position. An example would be an argument considering the pros and cons of cloning research from an ethical perspective or from a scientific perspective.
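Because the levels are ordered, they map naturally onto an integer enumeration, and comparing the level of an answer with the level a question requires (the basis of the bonus-point procedure discussed under Research Issues) becomes an integer comparison. This is a simplification, since the categories are only roughly hierarchical; the names `ThinkingLevel` and `bonus_points` and the `points_per_question` parameter are hypothetical.

```python
from enum import IntEnum


class ThinkingLevel(IntEnum):
    """Modified Bloom levels as used with CAPSI, lowest to highest."""

    ROTE_KNOWLEDGE = 1
    COMPREHENSION = 2
    APPLICATION = 3
    ANALYSIS = 4
    SYNTHESIS = 5
    EVALUATION = 6


def bonus_points(
    answers: list[tuple[ThinkingLevel, ThinkingLevel]],
    points_per_question: int = 1,
) -> int:
    """Award points for each (required, achieved) pair answered above the required level."""
    above = sum(1 for required, achieved in answers if achieved > required)
    return above * points_per_question


L = ThinkingLevel
unit_answers = [
    (L.COMPREHENSION, L.APPLICATION),  # original example given: above the question's level
    (L.APPLICATION, L.APPLICATION),    # answered at the required level
    (L.ANALYSIS, L.COMPREHENSION),     # answered below the required level
]
print(bonus_points(unit_answers))                         # 1
print(bonus_points(unit_answers, points_per_question=2))  # 2
```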

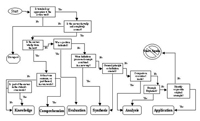

Flowcharts were constructed that permitted the thinking levels of both questions and answers to be assessed with good reliability (Crone-Todd, Pear, & Read, 2000; Pear, Crone-Todd, Wirth, & Simister, in press). This has set the stage for research on ways to raise the level of thinking at which students respond to questions in CAPSI-taught courses.
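Reliability here means that two people classifying the same questions or answers independently tend to assign the same level. Which index the cited studies reported is not stated in this paper; the sketch below shows two standard ones, percentage agreement (common in behavior analysis) and Cohen's kappa, which discounts the agreement expected by chance. The ratings are made up for illustration and are not data from those studies.

```python
from collections import Counter


def percent_agreement(rater_a: list[int], rater_b: list[int]) -> float:
    """Percentage of items that two raters assigned to the same level."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return 100.0 * matches / len(rater_a)


def cohens_kappa(rater_a: list[int], rater_b: list[int]) -> float:
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: probability that both raters pick the same level
    # independently, estimated from each rater's own distribution of levels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[level] * counts_b[level] for level in counts_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical level for every item; kappa is
        # undefined here, and this sketch reports perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Thinking levels (1-6) assigned to the same six answers by two raters (made-up data).
rater_1 = [1, 2, 3, 3, 4, 2]
rater_2 = [1, 2, 3, 4, 4, 2]
print(round(percent_agreement(rater_1, rater_2), 1))  # 83.3
print(round(cohens_kappa(rater_1, rater_2), 3))       # 0.778
```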

Research Issues

The first foray into investigating ways to increase thinking levels in student answers consisted of providing students with the modified taxonomy, the thinking level required by each question, and a system of bonus points for each question answered above the required level (Crone-Todd, 2001). For example, if a question asked for an example of a concept without specifying that the example had to be original (i.e., not in the study material), the question would be classified at level 2. A student who gave an original example would then be answering at level 3, and therefore above the level of the question. This procedure successfully increased the levels at which students answered the questions, showing that students are able to raise their demonstrated thinking levels.

With CAPSI as an instrument for probing students' thinking levels in a course, we are in a position to study variables thought to be important in helping students advance their thinking levels. For example, we might use CAPSI to examine whether some study materials and media are more effective than others at promoting higher-order thinking. Research issues that can be examined include the following. Are textbooks written in a manner that stimulates thinking or leads the reader through the discovery process more effective in facilitating higher-order thinking than those that present comprehensive coverage of factual material? Which are more effective at promoting higher-order thinking: lectures, discussion groups, or some mixture of the two? Are live presentations more effective than videos? Are face-to-face discussions more effective than online discussions? These are questions that need to be answered as we advance into the technological age of education.

Another important research area concerns the questions, exercises, and problems in a course. Research questions that might be studied in this area include the following. What is the most effective proportion of each category of thinking level? For example, a large proportion of evaluation questions (level 6) might be detrimental because, given the hierarchical nature of the taxonomy, students may not be adequately prepared to address questions at the highest level successfully. What is the most effective way of sequencing the question levels for a given unit in the study guide? For example, would higher-order thinking be promoted more effectively by having students answer all rote questions (level 1) first, then all comprehension questions (level 2), and so on, or would interspersing the levels be more effective? The information obtained by research on these issues would likely apply to courses taught with various other methods, not just those taught using CAPSI.

The social milieu of a CAPSI-taught course also provides a rich source of variables that may affect higher-order thinking. In that milieu are the students, acting both as learners and as peer reviewers, and the instructor and (if there is one) the teaching assistant, who oversee the system and perform evaluative and feedback functions. Research shows that, overall, students perform their peer-reviewing duties effectively (Martin, Pear, & Martin, in press a) and that students as learners largely comply with the feedback they receive from peer reviewers and from the instructor (Martin, Pear, & Martin, in press b). There is, however, considerable room for improvement, and research on this is in progress. Analysis of archived data shows that students in a CAPSI-taught course receive much more substantive feedback on their work than would be possible in a course taught by traditional methods.
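Analyses of this kind start from archived review records. The sketch below assumes a hypothetical record format (the field names are illustrative, not CAPSI's) and computes three simple summaries: how often a peer reviewer's pass judgment matches an expert's judgment of the same answer, the proportion of feedback items a learner acted on, and the mean number of substantive feedback items per answer. Deciding what counts as a substantive item, or as acting on it, is assumed to be done beforehand by a human coder.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ArchivedReview:
    """One archived peer review (hypothetical record format)."""

    peer_mark: bool      # True if the peer reviewer judged the answer a pass
    expert_mark: bool    # True if the instructor judged the same answer a pass
    feedback_items: int  # substantive comments the reviewer wrote
    items_acted_on: int  # comments the learner addressed in a later submission

    def __post_init__(self) -> None:
        if not 0 <= self.items_acted_on <= self.feedback_items:
            raise ValueError("items_acted_on must be between 0 and feedback_items")


def marking_accuracy(reviews: list[ArchivedReview]) -> float:
    """Proportion of reviews whose pass judgment matches the expert's."""
    if not reviews:
        raise ValueError("no reviews to analyze")
    return sum(r.peer_mark == r.expert_mark for r in reviews) / len(reviews)


def compliance_rate(reviews: list[ArchivedReview]) -> Optional[float]:
    """Proportion of feedback items acted on, or None if no feedback was given."""
    given = sum(r.feedback_items for r in reviews)
    if given == 0:
        return None
    return sum(r.items_acted_on for r in reviews) / given


def mean_feedback_per_answer(reviews: list[ArchivedReview]) -> float:
    """Average number of substantive feedback items per reviewed answer."""
    if not reviews:
        raise ValueError("no reviews to analyze")
    return sum(r.feedback_items for r in reviews) / len(reviews)


archive = [
    ArchivedReview(True, True, 2, 2),
    ArchivedReview(True, False, 1, 0),
    ArchivedReview(False, False, 3, 2),
    ArchivedReview(True, True, 0, 0),
]
print(marking_accuracy(archive))                # 0.75
print(round(compliance_rate(archive), 2))       # 0.67
print(mean_feedback_per_answer(archive))        # 1.5
```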

This interactive nature of CAPSI fits a social constructivist model of knowledge generation through interaction with others (Pear & Crone-Todd, in press). Much of the knowledge generation occurs on the part of the peer reviewers, who find (often to their surprise) that reading other students' answers or solutions and commenting on them initiates their own learning and higher-order thinking. Another important area of study, therefore, is the effect of the peer-review component of CAPSI on the development of higher-order thinking.

References


Bloom, B. S. (1956). Taxonomy of educational objectives: Handbook I. Cognitive domain. New York: David McKay.

Crone-Todd, D. E. (2001). Increasing the levels at which undergraduate students answer questions in computer-aided personalized system of instruction courses. PhD thesis submitted to the University of Manitoba.

Crone-Todd, D. E., Pear, J. J., & Read, C. N. (2000). Operational definitions of higher-order thinking objectives at the post-secondary level. Academic Exchange Quarterly, 4, 99-106.

Keller, F. S. (1968). "Good-bye, teacher...". Journal of Applied Behavior Analysis, 1, 79-89.

Kotte, J. L., & Schuster, D. H. (1990). Developing tests for measuring Bloom's learning outcomes. Psychological Reports, 66, 27-32.

Kulik, C.-L., Kulik, J. A., & Bangert-Drowns, R. L. (1990). Effectiveness of mastery learning programs: A meta-analysis. Review of Educational Research, 60, 265-299.

Martin, T. L., Pear, J. J., & Martin, G. L. (in press a). Analysis of proctor marking accuracy in a computer-aided personalized system of instruction course. Journal of Applied Behavior Analysis.

Martin, T. L., Pear, J. J., & Martin, G. L. (in press b). Proctor feedback and its effectiveness in a computer-aided personalized system of instruction course. Journal of Applied Behavior Analysis.

Pear, J. J., & Crone-Todd, D. E. (1999). Personalized system of instruction in cyberspace. Journal of Applied Behavior Analysis, 32, 205-209.

Pear, J. J., & Crone-Todd, D. E. (in press). A social constructivist approach to computer-mediated instruction. Computers & Education.

Pear, J. J., Crone-Todd, D. E., Wirth, K., & Simister, H. (in press). Assessment of thinking level in students' answers. Academic Exchange Quarterly.

[1] Paper to be presented at the 13th International Conference on College Teaching and Learning, Jacksonville, Florida, April 9-13, 2002.
